I recently attended the 2019 AWS Summit in Sydney, which saw over 20,000 attendees across the 3 days. I’ve been along to the Sydney event twice before, and each year AWS just keeps on growing, continually adding to the event.

This year AWS introduced the DeepRacer League, the world’s first global autonomous racing league. The challenge is to develop an algorithm that enables a small (1/18th scale) car to move itself around a racetrack. Competitors are required to use Machine Learning to train the car to drive around the track as fast as possible.

A few of my GJI colleagues (Alan Blockley, Nick Perkins & Anthony Grant) and I attended the summit and initially had no intention of spending so much time working on, or participating in, the DeepRacer League. However, after attending the first workshop we were hooked, determined to continually improve our reward functions.

What is DeepRacer?

The League was kicked off in 2018 at AWS’s largest conference, ReInvent. Since then, the competition has been touring to each local summit held around the world. The competitor with the fastest time at each local event wins a trip to ReInvent 2019, to compete in a final knockout tournament.

In addition to the ‘in person’ events held at the Summits, there is also a virtual league with a number of races taking place over the year. It is open to the public, and the winner of each of these virtual events will also win a trip to ReInvent in 2019, along with the overall top 20 place-getters.

Machine Learning!?

There are three types of Machine Learning:

-

Supervised learning is example-driven training. Data is labeled, like marking out the edges of a race track. This creates known outputs for given inputs, the model is then trained to predict output for new inputs.

-

Unsupervised learning is similar supervised learning, however the data is not labeled. The model is trained to find patterns in the input data, this is also known as Inference-based training.

-

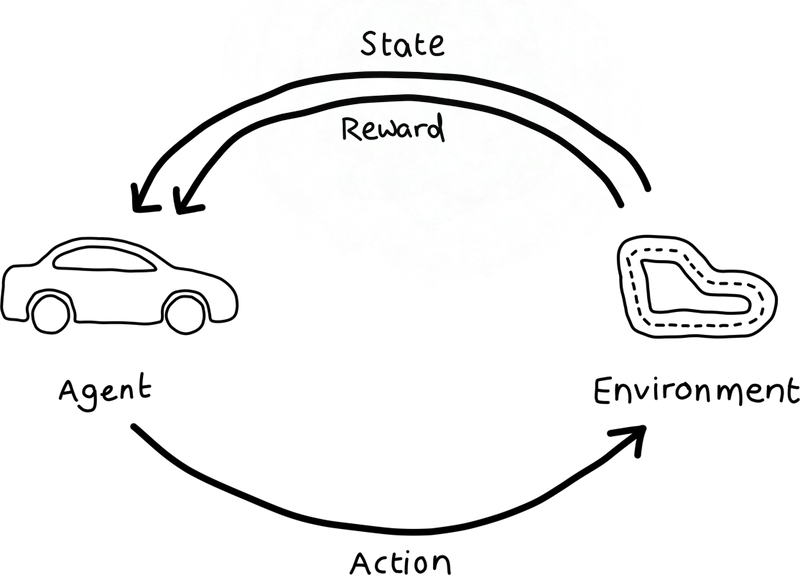

Reinforcement Learning, or RL, differs significantly from Supervised and Unsupervised learning. It doesn’t work with any pre-existing input data, instead working on trial and error. This generates feedback from a reward function to identify which actions lead to the best rewards. This type of Machine Learning is what DeepRacer uses.
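To make the trial-and-error idea concrete, here is a minimal sketch in Python. It is not how DeepRacer trains its models; it is a toy epsilon-greedy loop with three made-up actions and made-up rewards, where the agent tries actions, observes the reward, and gradually favours whichever action has paid off best. The names `train` and `toy_rewards` are hypothetical and only for illustration.

```python
import random


def train(rewards, episodes=500, epsilon=0.2, seed=0):
    """Learn which action pays best purely by trying actions and observing rewards."""
    rng = random.Random(seed)
    actions = list(rewards)
    estimates = {a: 0.0 for a in actions}  # running average reward per action
    counts = {a: 0 for a in actions}

    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: try a random action
            action = rng.choice(actions)
        else:
            # Exploit: pick the action with the best estimate so far
            action = max(actions, key=estimates.get)

        reward = rewards[action]  # feedback from the (toy) reward function
        counts[action] += 1
        # Incremental update of the running average
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates


# Made-up rewards: going straight is best on a straight track
toy_rewards = {"steer_left": 0.1, "go_straight": 1.0, "steer_right": 0.1}
learned = train(toy_rewards)
best_action = max(learned, key=learned.get)
```

No labeled data is involved at any point: the only signal the agent gets is the reward for each action it tries.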

So how does it work?

The DeepRacer console primarily uses two existing AWS services behind the scenes - SageMaker & RoboMaker.

Reinforcement learning works by creating a reward function to encourage behaviour that results in staying on the track, as well as finding the most efficient path around the track.

DeepRacer provides a number of parameters you can use to create the reward function. You can find them here

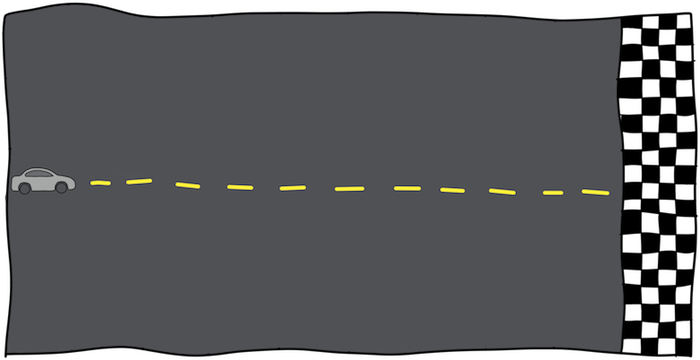

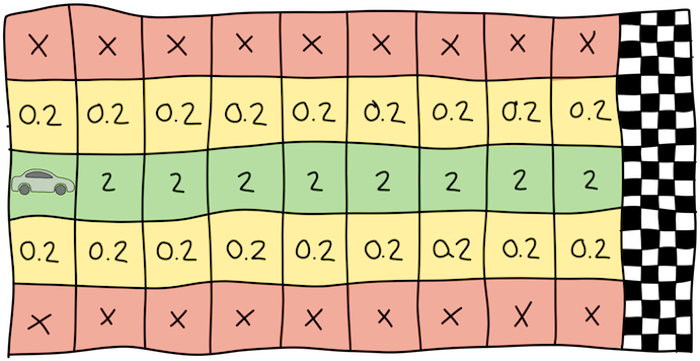

Here is an example of a reward function: imagine the track is a straight line, and you want to encourage the car to take the fastest route to the finish line.

In this example, you could give the largest reward for being closest to the center line, and diminishing rewards the further away the car is.

Through training, the simulation will aim to maximize the reward, which eventually will encourage the car to drive straight to the finish line.
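The center line example above could be sketched roughly like this. The `reward_function(params)` entry point and the `track_width` and `distance_from_center` keys are the ones the DeepRacer console passes in; the band widths and reward values are illustrative assumptions, not the function I raced with.

```python
def reward_function(params):
    """Give the largest reward near the center line, less the further out the car is."""
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Illustrative bands at 10%, 25% and 50% of the track width (assumed values)
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    if distance_from_center <= marker_1:
        reward = 1.0   # hugging the center line
    elif distance_from_center <= marker_2:
        reward = 0.5   # drifting a little
    elif distance_from_center <= marker_3:
        reward = 0.1   # close to the edge
    else:
        reward = 1e-3  # most likely off the track

    return float(reward)
```

For example, calling `reward_function({"track_width": 1.0, "distance_from_center": 0.05})` falls inside the first band and gets the full reward, while a car sitting 0.4 from the center only gets a small one.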

About the race

Day 1

Arriving early at the DeepRacer track, I took advantage of the lack of queues and tried one of the default supplied models to test out a DeepRacer on the track. After coming off the track on almost every lap, I was surprised to find I had even set a lap time (you can come off the track 4 times in a lap before it’s invalidated). Only 3 people had raced at this point, so I quickly took a photo of myself at the top of the leaderboard, not expecting to stay in first place for long…

After testing the track, we decided to check out the DeepRacer workshop. We had been trying to obtain a DeepRacer for months but had been unsuccessful due to ship dates being pushed back. Seeing one in person, along with being able to ask questions about how the training works, was a great experience.

During the workshop I heard over the PA system that a new lap time of 12.x seconds had been set. By the time the workshop had finished, I was barely in the top 10.

Throughout the rest of the day we tried a couple more models we’d started training during the workshop. By the end of day 1, several members of the GJI team were in the top 10, including Alan in 3rd place with a 10.9 second time.

That night instead of going out for dinner and drinks like any other conference, we stayed at the hotel and hosted our own hackathon. We attempted to learn as much as possible about reinforcement learning, and how to optimise our reward functions.

I stumbled onto a post by Matthieu, the winner of the French DeepRacer event, and decided to shoot him a message asking for any tips he could share. Much to my surprise, a couple of hours later I received an email from him, outlining some places to start (Thanks Matthieu!).

After sharing our learnings and training several models each, we decided to call it a night around 1am, after kicking off one last training job each to run whilst we slept.

Day 2

Going into the second day, we all decided that the aim was to stay in the top 10 so we could win a DeepRacer for the office.

As soon as the Keynote was over, we raced back up to the DeepRacer track to try our models from the night before.

Alan was up first, and since the previous day he had iterated on his model ~10 times. Unfortunately the model may have been overtrained, resulting in a 16 second lap time, worse than his attempt the day before.

I was up next, trying one of the very last models created the day before, but trained slightly longer overnight. I don’t know if it was the model, a fully charged DeepRacer, luck, or a combination of all 3, but the first lap it ran was blisteringly fast. So fast that I put down the tablet and said “What was the time!? No way I can do any better”.

Because the DeepRacer took off so fast on its first lap, the time recorder needed to confirm that it did in fact remain on the track, as he didn’t see the whole lap. Whilst the officials were deliberating, I was instructed to make use of the 2 or so minutes I had left on the track. Amazingly, I set another excellent lap time, which later turned out to be only 200ms slower than my initial run.

The rest of the day consisted of some interviews about DeepRacer, as well as attending some more sessions at the summit.

Racing was set to finish at 3:45pm that day, so at 3pm we headed back to the track and to my surprise I was still at the top of the leaderboard with 8.29s. Nick Perkins was in 5th place with a time of 10.64s and Alan Blockley in 6th with 10.7s.

At 3:43pm the competitor in 2nd place squeezed one last run in. After waiting for what felt like hours for his 4min session to finish, it was all over. I was ushered over to the podium and prepped for the ceremony, still in disbelief that I had won.

I can’t thank Alan Blockley, Nick Perkins & Anthony Grant enough. I am certain I wouldn’t have won without their contributions and the teamwork they were a part of.

Tips for DeepRacer

I thought I’d mention a couple of things I wish I had known earlier on in the event, which may’ve made more of a difference:

- Get on the track: There’s no limit to how many times you can try your models on the track. As early as possible, get out on the track and get a feel for how it all works. It helped me connect some dots when creating the function and running the simulation.

- Don’t overtrain models: AWS does mention the issues with sim2real here. As the real world track is not identical to the simulated environment, training a model for too long will decrease its ability to adapt to environment variables. I found that training for no less than 1hr and no more than 4hrs was a good place to start.

- Speed: When creating a training model, you can specify a maximum speed in m/s in the Action Space. This does not directly translate to the real world. When you run your model on a DeepRacer, you’re given a tablet with a web browser that’s connected to the DeepRacer.

  This is how you select your model and control speed based on throttle %. The throttle is dependent on the amount of battery charge the DeepRacer has. For me, it varied between 30%-55% to get it around the track at a good pace. I can’t imagine how fast it is at 100%.
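To give a rough idea of what the Action Space looks like, here is a sketch in Python of how a discrete set of steering/speed combinations can be built from a maximum steering angle and a maximum speed. The `build_action_space` helper is hypothetical, and the even spacing is my assumption about how the console lays the actions out, so treat it as an illustration rather than the console’s actual logic.

```python
from itertools import product


def build_action_space(max_steering_deg, steering_steps, max_speed_ms, speed_steps):
    """Build every steering/speed combination the model is allowed to choose from.

    Assumes evenly spaced values; steering_steps should be an odd number of at
    least 3 so that 'straight ahead' (0 degrees) is included.
    """
    # Steering angles evenly spaced from -max to +max
    step = 2 * max_steering_deg / (steering_steps - 1)
    steering = [-max_steering_deg + i * step for i in range(steering_steps)]

    # Speeds evenly spaced up to the maximum m/s set for training
    speeds = [max_speed_ms * (i + 1) / speed_steps for i in range(speed_steps)]

    return [
        {"steering_angle": angle, "speed": speed}
        for angle, speed in product(steering, speeds)
    ]


# Example values only: 30 degrees max steering in 5 steps, 3 m/s max in 3 steps
actions = build_action_space(30, 5, 3.0, 3)
```

The speeds in this list only exist in the simulator. On the physical car, how fast each action actually moves depends on the throttle % you set on the tablet and the battery charge.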

Next step is ReInvent 2019 in Las Vegas. If you’re competing in the virtual league or just playing around in the DeepRacer console and have any questions / issues, feel free to shoot me a message.